To explore the behavior of a neural network with quantized convolution layers, use the Deep Network Quantizer app. This example quantizes the learnable parameters of the convolution layers of the squeezenet neural network after the network has been retrained to classify new images by following the Train Deep Learning Network to Classify New Images example.

Load the network to quantize into the base workspace.
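The load command itself is not shown here. A minimal sketch, assuming the retrained network was saved in a MAT-file as a variable named `net`; the file name `squeezenetmerch.mat` is an assumption, so substitute the file that your own retraining step produced:

```matlab
% Assumption: squeezenetmerch.mat holds the retrained SqueezeNet in a
% variable called net. Replace the file name with your own.
load squeezenetmerch

% Omit the semicolon to display a summary of the network.
net
```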

    net = 
      DAGNetwork with properties:

             Layers: [68x1 nnet.cnn.layer.Layer]
        Connections: [75x2 table]
         InputNames: {'data'}
        OutputNames: {'new_classoutput'}

Define calibration and validation data.

The app uses calibration data to exercise the network and collect the dynamic ranges of the weights and biases in the convolution and fully connected layers of the network and the dynamic ranges of the activations in all layers of the network. For the best quantization results, the calibration data must be representative of inputs to the network.

The app uses the validation data to test the network after quantization to understand the effects of the limited range and precision of the quantized learnable parameters of the convolution layers in the network.

In this example, use the images in the MerchData data set. Define an augmentedImageDatastore object to resize the data for the network. Then, split the data into calibration and validation data sets.
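One way to set this up is sketched below. It assumes that `MerchData.zip` is available on the MATLAB path, that `net` is the network loaded earlier, and that its first layer is the image input layer. The 70/30 split ratio and the intermediate names `imds`, `calImds`, and `valImds` are illustrative assumptions; only `calData` and `valData` are names used by this example.

```matlab
% Assumption: MerchData.zip is on the MATLAB path. Extract it to the
% current folder.
unzip('MerchData.zip');

% Label each image with the name of the folder that contains it.
imds = imageDatastore('MerchData', ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');

% Assumption: an illustrative 70/30 split per class, chosen at random.
[calImds,valImds] = splitEachLabel(imds,0.7,'randomized');

% Resize the images to the input size of the network. This assumes that
% the first layer of net is the image input layer.
inputSize = net.Layers(1).InputSize(1:2);
calData = augmentedImageDatastore(inputSize,calImds);
valData = augmentedImageDatastore(inputSize,valImds);
```

Splitting before resizing keeps the class labels balanced across the two sets, because `splitEachLabel` operates on the labeled image datastore.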

At the MATLAB command prompt, open the app.
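The command that opens the app is:

```matlab
% Open the Deep Network Quantizer app.
deepNetworkQuantizer
```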

In the app, click the New button. The app verifies your execution environment. To use the Deep Network Quantizer app, you must have a GPU execution environment. If there is no GPU available, this step produces an error.
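Before clicking New, you can run a quick check from the command line. This is only a sketch: `canUseGPU` reports whether MATLAB can use a supported GPU for computation, and the verification that the app performs may cover more than this, so treat a positive result as necessary rather than sufficient.

```matlab
% canUseGPU returns true when a supported GPU is available for computation.
if canUseGPU
    disp("A supported GPU was found.")
else
    disp("No supported GPU was found; the app is likely to report an error.")
end
```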

In the dialog, select the network to quantize from the base workspace.

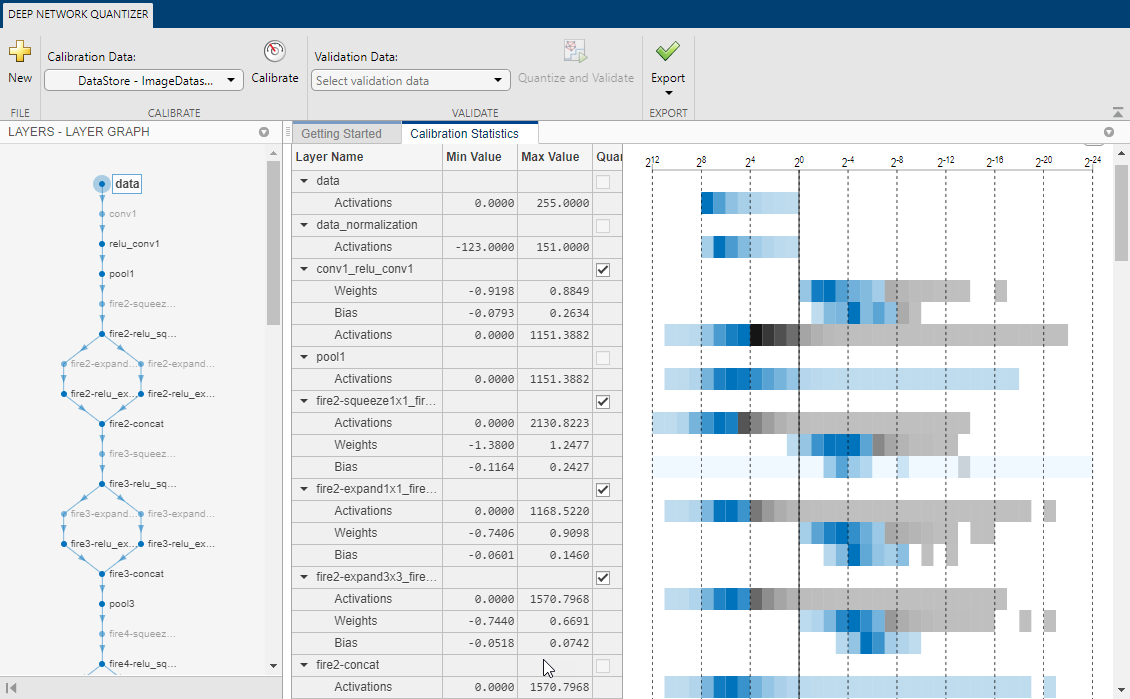

After selecting the network, the app displays the layer graph of the network.

In the Calibrate section of the toolstrip, under Calibration Data, select the augmentedImageDatastore object from the base workspace containing the calibration data, calData.

Click Calibrate.

The Deep Network Quantizer uses the calibration data to exercise the network and collect range information for the learnable parameters in the network layers.
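The same calibration step can also be run from the command line with a `dlquantizer` object. The sketch below assumes that `net` and `calData` already exist in the base workspace; the variable names `quantObj` and `calResults` are illustrative.

```matlab
% Create a quantizer object for the network, targeting a GPU.
quantObj = dlquantizer(net,'ExecutionEnvironment','GPU');

% Exercise the network with the calibration data and collect the ranges.
calResults = calibrate(quantObj,calData);

% calResults is a table of the collected ranges; show the first rows.
head(calResults)
```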

When the calibration is complete, the app displays a table that lists the weights and biases in the convolution and fully connected layers of the network and the activations in all layers of the network, together with the minimum and maximum values of each during the calibration. To the right of the table, the app displays histograms of the dynamic ranges of the parameters. The gray regions of the histograms indicate data that cannot be represented by the quantized representation. For more information on how to interpret these histograms, see Quantization of Deep Neural Networks.
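To make the idea of unrepresentable data concrete, here is a simplified sketch of scaled 8-bit quantization with a power-of-two scale factor. The sample values and the rule used to pick the scale are assumptions for illustration; this is not necessarily the exact scheme that the app applies.

```matlab
% Hypothetical sample of single-precision values from one layer.
x = single([-3.2 -0.004 0 0.0625 0.5 1.7 3.9]);

% Pick the number of integer bits needed to cover the largest magnitude,
% and give the remaining bits (8 minus sign bit minus integer bits) to
% the fraction.
maxAbs   = max(abs(x));
intBits  = ceil(log2(maxAbs));
fracBits = 7 - intBits;
scale    = 2^(-fracBits);        % size of one quantization step

% Quantize: scale, round, and saturate to the int8 range.
q = int8(max(min(round(x/scale),127),-128));

% Map back to real values to see what was lost.
xhat = single(q)*scale;
err  = abs(x - xhat);
```

With these values the step size is 2^-5, so -0.004 is smaller than half a step and rounds to zero. Values this small relative to the largest magnitude in the layer are the kind of data that a fixed 8-bit scaling cannot represent.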

In the Quantize column of the table, indicate whether to quantize the learnable parameters in the layer. Layers that are not convolution layers cannot be quantized, and therefore cannot be selected. Layers that are not quantized remain in single precision after quantization.

In the Validate section of the toolstrip, under Validation Data, select the augmentedImageDatastore object from the base workspace containing the validation data, valData.

Click Quantize and Validate.

The Deep Network Quantizer quantizes the weights, activations, and biases of convolution layers in the network to scaled 8-bit integer data types and uses the validation data to exercise the network. The app determines a metric function to use for the validation based on the type of network that is being quantized.

| Type of Network | Metric Function |
|---|---|
| Classification | Top-1 Accuracy – Accuracy of the network |
| Regression | MSE – Mean squared error of the network |
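As a reminder of what these two metrics measure, the sketch below computes each one on a few made-up values. The labels and numbers are hypothetical, and the app's own metric functions may differ in detail.

```matlab
% Top-1 accuracy: fraction of samples whose predicted label is correct.
predicted = ["cap" "cube" "cap" "torch"];     % hypothetical predictions
actual    = ["cap" "cube" "torch" "torch"];   % hypothetical true labels
top1Accuracy = mean(predicted == actual);     % 3 of 4 correct

% Mean squared error: average of the squared prediction errors.
yPred = [1 2 3];                              % hypothetical predictions
yTrue = [1 2 5];                              % hypothetical targets
meanSquaredError = mean((yPred - yTrue).^2);  % (0 + 0 + 4)/3
```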

When the validation is complete, the app displays the results of the validation, including:

- Metric function used for validation
- Result of the metric function before and after quantization
- Memory requirement of the network before and after quantization (MB)
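Comparable results can be obtained from the command line with the `validate` function. The sketch below assumes that `quantObj` is a `dlquantizer` object that has already been calibrated and that `valData` is the validation datastore. The result field names follow the `validate` documentation as best understood here, so check them against your release.

```matlab
% Assumption: quantObj is a calibrated dlquantizer object.
% Quantize the network and evaluate it on the validation data.
valResults = validate(quantObj,valData);

% Metric value before and after quantization.
valResults.MetricResults.Result

% Memory used by the learnable parameters before and after quantization.
valResults.Statistics
```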

After quantizing and validating the network, you can choose to export the quantized network.

Click the Export button. In the drop-down list, select Export Quantizer to create a dlquantizer object in the base workspace. To open the GPU Coder app and generate GPU code from the quantized neural network, select Generate Code. Generating GPU code requires a GPU Coder license.

If the performance of the quantized network is not satisfactory, you can exclude some layers from quantization by deselecting them in the table. To see the effects, click Quantize and Validate again.